c03360 No.158

Information Science Training

Getting a firm understanding of information science, and specifically information entropy, provides essential context about the structural limitations of artificial intelligence. While knowing the basics of information science assists with understanding contemporary cryptography, understanding how information relates to energy broadens your horizons and allows you to detect epistemological gaps in machine learning processes. For example, information input into a system correlates with an increase of energy within that system. This is true for black holes, computer networks, machine learning processes, neurology, and cognition.

You can review the full Milanote on this section here: https://app.milanote.com/1FjKKO16uYDY9I/information-science

Challenges

- Understand the basic composition of information.

- Learn how we measure information.

- Follow up on the details of how information entropy works.

- Discover the ramifications of information entropy limitations.

____________________________

Disclaimer: this post and the subject matter and contents thereof - text, media, or otherwise - do not necessarily reflect the views of the 8kun administration.

c03360 No.159

https://www.hooktube.com/watch?v=_lNF3_30lUE

Atoms are, simultaneously, stores of state and computational units. They carry data and, because of their energy, they can transform that data. How atoms are arranged is called information. Coal and diamond are both made largely of carbon atoms, but the information on how those atoms are arranged fundamentally changes the material's behavior.

The behavior of atoms is governed by the very counter-intuitive rules of quantum mechanics. These rules explain vital limitations that, if understood, provide great insight into how to exploit key parts of information theory.

Information and energy have a relationship via thermodynamics, which we will explore in later videos.

c03360 No.160

https://www.hooktube.com/watch?v=Da-2h2B4faU

My biggest beef with string theory is that it is background dependent and that the ability to test its conclusions is very limited. Nonetheless, it is important to understand what it proposes even if it is ultimately incomplete.

Atoms themselves are made up of elementary particles, described by the Standard Model. We utilize a large amount of energy to interact with these subatomic particles and, in doing so, alter them. This means we change the state we are trying to observe, making precise measurements of state impossible. This lack of precision is known as uncertainty.

The idea of string theory was to unify quantum mechanics with gravity, but it requires an explosion of dimensions (up to twelve in some cases) to be consistent. Our geometric universe exists in four dimensions.

The fundamental problem of uncertainty shows that information can be represented in two manners: as discrete packages of details and as a statistical approximation. As we will discover in later videos, the discrete packages of bits used in computers today actually derive from the statistical approximations!

c03360 No.161

https://www.hooktube.com/watch?v=IXxZRZxafEQ

A photon is the smallest amount of transportable information. Photons are, simultaneously, discrete packets of information and statistical approximations of information.

As the state of an atom's electron changes (as the atom performs the equivalent of computation) it emits a photon. That photon has information about the original atom encoded within its fields.

The speed at which this information travels abides by the rules known as relativity. The delay between the origin of a photon and its observation is vital to all cognitive processes. If the information within a photon were consumed too slowly, the observer would be operating at a significant delay, much like how lag affects gaming.

Even with light moving as fast as it does, it takes roughly 200 milliseconds for light entering the eye to register in the conscious awareness of a human. This delay varies in animals and robotics.

All of this information abides by the rules of entropy as well.

c03360 No.162

https://www.hooktube.com/watch?v=p0ASFxKS9sg

Complex systems, organic or otherwise, rely on information transmission and comprehension. Our physical mass alters the energy of atoms, causing them to bounce into one another (chemical, sonic) or change their electron states (photons), encoding information about not only the atoms themselves, but also the orchestrated source of that communication.

We constantly consume, measure, and compare this information. This information can be measured as bits (or as "units of surprise"), and once we do so, we begin building approximations against which to measure future information.

This metric of information describes a discrete unit that is derived from a statistical approximation of potential yes/no outcomes. Nearly all types of information can be represented by this yes/no analysis.
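To make the "units of surprise" idea concrete, here is a minimal sketch in Python. It assumes the standard definition of surprisal, the negative base-2 logarithm of an outcome's probability; the function name `surprisal_bits` is made up for illustration and does not come from the video.

```python
import math

def surprisal_bits(probability: float) -> float:
    """Information content, in bits, of an outcome with the given probability."""
    if not 0.0 < probability <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(probability)

# A fair coin flip answers exactly one yes/no question.
print(surprisal_bits(0.5))
# One of eight equally likely outcomes takes three yes/no questions.
print(surprisal_bits(1 / 8))
# A near-certain outcome carries almost no surprise.
print(surprisal_bits(0.99))
```

The less likely an outcome is, the more yes/no questions it takes to pin it down, and the more bits it is worth.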

c03360 No.163

https://www.hooktube.com/watch?v=musBo7Kafic

Naively sending complex streams of information as energy through elaborate mediums exposes the information to a tremendous amount of external influence. This alters the information by increasing the presence of noise. This increase of noise makes the information more difficult to evaluate. To get around this problem, we can use timed impulses instead of continual streams.

There are a wide variety of ways to send such impulses, and through much experimentation, you can discover the most efficient ways to transfer the most information in the least time. Observing the statistical likelihood of information gives you an indication of what bias the source is influenced by, and you can represent those statistical likelihoods discretely.

c03360 No.164

https://www.hooktube.com/watch?v=WrNDeYjcCJA

Clever usage of time delays can help us convey complex information in environments of high noise. For example, the Polybius square combined two sets of five torches, lit in different combinations, to represent one of twenty-four characters. Over our history, we've experimented with different ways to convey more information over greater distances in less time.
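As a rough sketch of the torch scheme, the Python below lays the 24 letters of the Greek alphabet out row by row in a 5x5 grid and signals each letter as a pair of torch counts (row, then column). The grid layout and the function names are assumptions made for illustration, not a claim about the exact historical procedure.

```python
# The 24 letters of the Greek alphabet, laid out row by row in a 5x5 grid.
GREEK = "ΑΒΓΔΕΖΗΘΙΚΛΜΝΞΟΠΡΣΤΥΦΧΨΩ"

def torches_for(letter):
    """Return (left_torches, right_torches), each between 1 and 5."""
    row, col = divmod(GREEK.index(letter), 5)
    return row + 1, col + 1

def letter_for(left, right):
    """Decode a pair of torch counts back into a letter."""
    index = (left - 1) * 5 + (right - 1)
    if not (1 <= left <= 5 and 1 <= right <= 5) or index >= len(GREEK):
        raise ValueError("no letter at that grid position")
    return GREEK[index]

for letter in "ΝΙΚΗ":
    print(letter, torches_for(letter))
```

Two signals of at most five torches each cover 25 grid positions, which is enough for all 24 letters, so the sender never needs 24 distinct signals.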

We've learned how to encode information about ourselves into increasingly lengthy sequences of impulses changing over time. For this impulse communication to work, we must apply agreed-upon limitations to the message space, which in turn imparts a statistical bias upon the messages within the space.

c03360 No.165

https://www.hooktube.com/watch?v=Cc_Y2uP-Fag

As we began to apply mechanical and electronic equipment to impulse-based communication, we quickly discovered limitations of transmission. Enough time must be allowed for an impulse to register as the correct symbol on the receiving end. Even with high-quality equipment that allows for rapid impulse dispatching, the power and consistency of a signal must be higher than the amount of noise the equipment also carries with it.

Symbols are the representation of a signal-constructed state that persists for a necessary minimum of time. This signal-to-noise ratio problem manifests itself in a similar, though not identical, manner in machine learning and neurons.

c03360 No.166

https://hooktube.com/watch?v=X40ft1Lt1f0

To achieve optimal transmission time when sending large numbers of impulses to a receiver, we need to figure out the minimum and the maximum numbers of impulses we have to send. From here, we can apply an agreed-upon coding methodology to convert the signals into symbols.

As you can see, the simple act of sending impulses over time to transmit information has efficiencies rooted in discovering the biases of the information source. This is how MP3s and JPEGs work: They exploit the sensory limitations of the ear and the eye and only encode the information that you can perceive.
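One well-known way to turn a source's bias into shorter messages is Huffman coding, which gives frequent symbols short codes and rare symbols long ones. The sketch below is a generic illustration of that technique in Python, not the specific method from the video, and not how MP3 or JPEG work as a whole (those formats also discard detail you cannot perceive before any such coding step). The function name `huffman_code` is made up for this example.

```python
import heapq
from collections import Counter

def huffman_code(message):
    """Build a prefix-free binary code from the symbol frequencies in message."""
    counts = Counter(message)
    if not counts:
        raise ValueError("message must not be empty")
    if len(counts) == 1:
        return {next(iter(counts)): "0"}
    # Heap entries: (weight, unique tie-breaker, {symbol: code built so far}).
    heap = [(weight, i, {symbol: ""})
            for i, (symbol, weight) in enumerate(counts.items())]
    heapq.heapify(heap)
    next_id = len(heap)
    while len(heap) > 1:
        # Merge the two least frequent groups, prefixing their codes with 0 and 1.
        w1, _, left = heapq.heappop(heap)
        w2, _, right = heapq.heappop(heap)
        merged = {s: "0" + c for s, c in left.items()}
        merged.update({s: "1" + c for s, c in right.items()})
        heapq.heappush(heap, (w1 + w2, next_id, merged))
        next_id += 1
    return heap[0][2]

message = "aaaaaaaabbbc"
code = huffman_code(message)
encoded = "".join(code[s] for s in message)
print(code)
print(len(encoded), "bits, versus", 2 * len(message), "bits at a fixed 2 bits per symbol")
```

The saving comes entirely from the bias of the source: if all three symbols were equally common, the variable-length code would offer little or no advantage.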

c03360 No.169

https://www.hooktube.com/watch?v=o-jdJxXL_W4

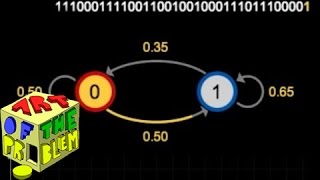

When dealing with the evolution of impulses over time, there is a strong statistical chance that the unknown signal you are about to receive is dependent upon a previous signal. This dependency in how signals evolve can be mapped via a Markov chain.

You can analyze all occurrences of a specific signal in a stream and count the occurrences of the specific signals that immediately preceded them. From here, you can assign a statistical chance of one signal leading to another (or to itself).
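The counting step can be sketched in a few lines of Python. This version counts, for each signal, which signal comes immediately after it, which is the same bookkeeping viewed from the other side. The stream `"AABABBBA"` and the function name `transition_probabilities` are invented for illustration.

```python
from collections import Counter, defaultdict

def transition_probabilities(stream):
    """Estimate the chance of each signal being followed by each other signal."""
    counts = defaultdict(Counter)
    # Walk the stream as adjacent (current, following) pairs.
    for current, following in zip(stream, stream[1:]):
        counts[current][following] += 1
    return {
        state: {nxt: n / sum(followers.values()) for nxt, n in followers.items()}
        for state, followers in counts.items()
    }

table = transition_probabilities("AABABBBA")
for state, followers in table.items():
    print(state, followers)
```

Each row of the resulting table sums to 1 and describes one state of the chain: given the signal you just saw, how likely each possible next signal is.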

No matter the permutation of statistics or state, or when you start and stop a Markov chain, the long-run outcome will converge on a specific ratio (so long as every state stays reachable from every other), and thus the foundation of information entropy is born.
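The convergence can be checked numerically. The sketch below uses a made-up two-state chain and repeatedly applies its transition table to two very different starting points. It assumes a well-behaved chain in which every state can reach every other and the chain does not cycle rigidly; the matrix `P` and the function names are illustrative only.

```python
def step(distribution, matrix):
    """Advance a probability distribution over states by one transition."""
    n = len(matrix)
    return [sum(distribution[i] * matrix[i][j] for i in range(n)) for j in range(n)]

def long_run_ratio(start, matrix, steps=200):
    """Apply the transition matrix repeatedly and return the final distribution."""
    dist = list(start)
    for _ in range(steps):
        dist = step(dist, matrix)
    return dist

# Hypothetical chain: row i holds the chances of moving from state i to each state.
P = [[0.9, 0.1],
     [0.5, 0.5]]

from_first = long_run_ratio([1.0, 0.0], P)   # start certain of state 0
from_second = long_run_ratio([0.0, 1.0], P)  # start certain of state 1
print(from_first)
print(from_second)
```

Both starting points end up at the same ratio, about five parts state 0 to one part state 1, which is what you get by solving for the distribution that one more step leaves unchanged.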

c03360 No.170

https://www.hooktube.com/watch?v=3pRR8OK4UfE

If we take everything we've learned about impulse-based communication and the probability of statistical distributions and apply it to words, we start to notice a very interesting pattern.

Grammar has rules to enforce the coherence of a statement, so the words that can follow any previous word must be significantly limited. Representing these limitations as statistical values in a Markov chain allows us to create increasingly grammar-compliant statements made entirely at random.
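A toy version of this idea in Python: record which words follow which in a sample text, then walk those records at random. The tiny corpus and the names `build_chain` and `generate` are invented for illustration; a real experiment would use a far larger body of text.

```python
import random
from collections import defaultdict

def build_chain(text):
    """Map each word to the list of words observed immediately after it."""
    words = text.split()
    chain = defaultdict(list)
    for current, following in zip(words, words[1:]):
        chain[current].append(following)
    return dict(chain)

def generate(chain, start, length, seed=None):
    """Random walk over the chain; repeated followers are proportionally likelier."""
    rng = random.Random(seed)
    word = start
    output = [word]
    for _ in range(length - 1):
        followers = chain.get(word)
        if not followers:
            break  # dead end: this word was never seen with a successor
        word = rng.choice(followers)
        output.append(word)
    return " ".join(output)

corpus = "the cat sat on the mat and the dog sat on the cat"
chain = build_chain(corpus)
print(generate(chain, "the", 8, seed=1))
```

Every adjacent word pair in the output occurred somewhere in the corpus, so the result tends to look locally grammatical even though no grammar rules were written down.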

Claude Shannon discovered the most critical part of Gnostic Warfare: the amount of information encoded in a message is dependent upon the structural bias of the message generator. This key limitation brings us to information entropy.

c03360 No.171

https://www.hooktube.com/watch?v=R4OlXb9aTvQ

Information entropy measures the amount of information a message has by determining the number of yes/no questions you have to ask, on average, to correctly guess the next unknown symbol. In addition to binding information volume to surprise, we are also able to determine the bias of the symbol-generating source.

For example, when the probability of one symbol appearing is equal to the probability of any other symbol appearing, this is a very strong indicator that the signal source is trying to hide information by making every symbol maximally uncertain.
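Here is a small Python sketch of that measurement. It assumes Shannon's standard formula, the average of each symbol's surprise weighted by how often the symbol occurs, and estimates the probabilities from observed frequencies. The two sample streams and the name `entropy_bits` are invented for illustration.

```python
import math
from collections import Counter

def entropy_bits(symbols):
    """Average bits per symbol, estimated from the observed symbol frequencies."""
    counts = Counter(symbols)
    total = sum(counts.values())
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

# Four symbols, all equally likely: the maximum uncertainty for four symbols.
uniform = "ABCD" * 25
# The same four symbols, but the source heavily favors "A".
biased = "A" * 85 + "B" * 5 + "C" * 5 + "D" * 5

print(entropy_bits(uniform))
print(entropy_bits(biased))
```

The uniform stream needs two full yes/no questions per symbol, while the biased stream needs less than one on average. That gap below the maximum is the measurable fingerprint of the source's bias.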

In robotics, AI, and biological neurology, the occurrence of the symbols they generate will not be anywhere near maximum uncertainty (sans a layer of AES encryption masking it). This intentional lack of maximal uncertainty is a heavy indicator of innate structural biases in how each being manages its symbols of reality.

c03360 No.172

https://www.hooktube.com/watch?v=0mZTW88Q6DY

As an information source becomes more predictable (its entropy falls), the ability to represent the information it generates in a smaller message space increases.

If an information source becomes less predictable (its entropy rises), the ability to represent the information it generates in a smaller message space decreases.
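A quick way to see this relationship is to hand a general-purpose compressor two streams of the same length, one highly predictable and one not. The sketch below uses Python's standard `zlib` module; the two sample streams are invented for illustration, and the exact compressed sizes will depend on the zlib version.

```python
import random
import zlib

rng = random.Random(0)  # fixed seed so the run is repeatable

# Highly predictable source: the same two symbols over and over.
predictable = b"AB" * 5000
# Unpredictable source: every byte value equally likely.
unpredictable = bytes(rng.randrange(256) for _ in range(10000))

predictable_size = len(zlib.compress(predictable))
unpredictable_size = len(zlib.compress(unpredictable))

print(len(predictable), "bytes ->", predictable_size, "bytes")
print(len(unpredictable), "bytes ->", unpredictable_size, "bytes")
```

The predictable stream shrinks to a tiny fraction of its original size, while the unpredictable one barely shrinks at all (and may even grow slightly from the compressor's own overhead).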

This means that if an information source puts out less information while its number of observers increases, this is because its entropy has become more predictable, allowing it to send out coherent messages involving fewer symbols.

This behavior helps categorize the social efficiency of a communicative being.

c03360 No.173

https://www.hooktube.com/watch?v=cBBTWcHkVVY

As messages achieve maximum compressibility based on the entropic bias of their source, external noise can significantly interfere with the decompressed message. Adding parity bits for each segment of symbols helps the receiver understand what to expect from a collection of symbols.
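The simplest form of this is a single even-parity bit per block, sketched below in Python. The block size and the function names are assumptions made for illustration. Note that one parity bit can only detect an odd number of flipped bits; it cannot say which bit flipped, and it misses an even number of flips.

```python
def add_parity(bits):
    """Append one bit so that the total number of 1s in the block is even."""
    return bits + [sum(bits) % 2]

def parity_ok(block):
    """True if the block still contains an even number of 1s."""
    return sum(block) % 2 == 0

sent = add_parity([1, 0, 1, 1, 0, 0, 1])
print(sent, parity_ok(sent))

# Simulate noise flipping a single bit in transit.
received = list(sent)
received[2] ^= 1
print(received, parity_ok(received))
```

More robust schemes spend more bits on parity in exchange for being able to locate and correct errors, which is the trade-off the next paragraphs describe.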

DNA error correction after mitosis performs a similar and much more robust version of this expectation confirmation. In theory, neurons should be performing a similar task regarding symbol management.

An interesting side effect of this concept is that it allows you to measure what kinds of noise environments an information source has been biased to survive in. As noise increases, more resources must be allocated to creating and confirming parity. High parity to confirm a message means the information source has been designed to communicate in a very chaotic and very noisy environment. DNA is an example of a communication methodology designed to endure highly chaotic environments.

c03360 No.174

https://www.hooktube.com/watch?v=BU1Y0OrXTxE

Recall that, in quantum mechanics, information is the state of some vector (atom, photon, etc.). The particle has a state, and to measure it, you have to fire a photon at it. The state of the photon is altered by the state of the particle. You extract the state of the particle by inferring how it altered the state of the photon.

We know we can derive information about the state of a particle, but can we destroy information? Meaning, if we disassemble the way the atoms of an element are arranged, did we destroy the information of that element? No. The information still exists. In order to preserve reversibility, information cannot be destroyed.

So not only does information carry the structural bias of its source, but when we apply this entropic property to particles, we conclude that the structural bias can never be destroyed either.

POSTULATE: Because of the uncertainty principle, every particle in the universe has at least maximum information entropy as its lowest possible entropy. Meaning, the observed state of one particle has the same chance of occurring as any other observed state. This tells us something about the structural bias of the particle. From a cryptographic analysis, every particle in the universe is a highly secretive agent that is intentionally hiding itself from everything else. If this postulate is true, it may be because any biasing that favors one state over another may result in the destabilization of the information that makes up the particle.

c03360 No.188

https://www.hooktube.com/watch?v=yWO-cvGETRQ

The last video introduced the idea of information conservation. This video explains how information is conserved even in the most seemingly destructive of scenarios: entry into a black hole.

As we've learned, information carries the structural bias of its source, within both the discrete impulses themselves and within the flow of those impulses. If information can be destroyed, then we have to throw out everything that depends upon the third law of thermodynamics. Because of these repercussions, there is a tremendous amount of pressure to preserve mathematics as is and find a way to ensure that information cannot be destroyed in scenarios such as black holes.

This leads us to two outcomes: information cannot be destroyed because it is either hidden or preserved. There are known problems for hiding local information explained in the No-Hiding theorem (https://en.wikipedia.org/wiki/No-hiding_theorem), but there are clever but incomplete workarounds to that, including Pilot Wave theory (https://en.wikipedia.org/wiki/Pilot_wave_theory) and the Fecund Universe postulate (https://en.wikipedia.org/wiki/Lee_Smolin#Fecund_universes).

This brings us to one of the more complete conclusions: information is preserved, even in a black hole. As information is added to a black hole, it grows in diameter. Because of the time dilation effects of a black hole, as matter falls into it, the frame of reference shifts for the matter and outsiders witness the matter slowing down until it stops completely. In essence, the information about the matter becomes "encoded" onto the surface of the black hole while the matter continues to fall into the black hole. This means that when a black hole radiates away its energy, the energy can carry away the information on the surface of the black hole as well, thus preserving the information rather than destroying it.

This is all rather complicated, and I recommend supplementary reading on the subject. This information preservation and, specifically, the surface encoding part, leads us to the Holographic Principle.

c03360 No.211

https://www.hooktube.com/watch?v=9dx0TiFdwNs

Holograms encode 3D information into 2D information in a lossy manner. If you add one bit of information to a black hole, the surface of the black hole grows by roughly one square Planck unit. This exposes a strangeness about the black hole.

If you take a box and fill it with objects, the capacity of how many objects the box can hold can be measured in advance by multiplying the length, width, and height of the box. However, when dealing with how much information a black hole can hold, you don't measure the volume of the black hole. Instead, you measure the diameter and work out the surface from it. This means the information capacity of a black hole is determined by its surface area, not its volume. This would be like saying the physical capacity of the box is based on the writing on the outside of the box.
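For reference, the standard Bekenstein-Hawking formula makes the surface-area scaling explicit. The symbols below are not from the post and are introduced here for illustration: $A$ is the horizon area, $d$ the horizon diameter of a non-rotating black hole, $\ell_P$ the Planck length, and $N_{\text{bits}}$ the capacity expressed in bits.

```latex
S_{BH} = \frac{k_B c^3 A}{4 G \hbar} = \frac{k_B A}{4 \ell_P^2},
\qquad \ell_P^2 = \frac{G \hbar}{c^3},
\qquad A = \pi d^2

N_{\text{bits}} = \frac{S_{BH}}{k_B \ln 2} = \frac{A}{4 \ell_P^2 \ln 2}
```

Doubling the diameter therefore quadruples the capacity, where a volume-based capacity would grow eightfold. Read literally, the formula gives about $4 \ln 2 \approx 2.8$ square Planck lengths per bit, so the "one square Planck unit per bit" rule of thumb holds up to a constant factor.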

This means all 3D information within a black hole is encoded onto its 2D surface/hologram.

The holographic principle is a mathematical model of convenience. In translating a 3D universe into a 2D hologram, the concept of gravity goes away, which helps isolate the behavior of gravity via comparison.
