85a843 No.494745

A place for codefags to make the chans searchable.

8e345d No.494816

>>494745

ctrl-f as in fagg0t like 0p

85a843 No.495005

Posts from #608

>>493751

>>494228

>>494299

>>494080

>>494202

>>494015

>>493888

>>493884

>>493882

>>493881

>>493886

>>493919

>>493939

>>493854

>>494489

>>494264

>>494457

>>494503

>>494460

>>494405

>>494451

>>494528

>>494471

>>493877

>>493898

>>493929

>>494283

>>494184

>>494548

1eba68 No.495890

One further comment from a heavy database user for what it's worth:

If we had a list of 'tags' that anons could enter as they post (in a specific format, e.g. preceded by **) covering topics that emerge (such as 'mkultra', 'bridge' etc. - related to topics brought up by Q) when searching through the data it would serve as a way to link crumbs by subject and as an additional variable / filter in any search would serve to streamline any search.

these would have to be moderated by BV / BO / Baker; would not be any more work than creating the notable posts per bread, although would be useful to find a way to insert them after the post identifier to create the link (i.e. >>xxxxxx within/2 **xxxxxx = TRUE).

Historical would be an issue but if there were some way of batch-adding at data assimilation stage based on linked crumbs, as well as specific 'meta-moderators' as we run searches etc.

However it might work - principle is an easily assignable value to identify crumb subject based on Q's topics so more information can be retrieved via regular search.

85a843 No.496431

>>495890

>One further comment

Well, you kind of lost me pretty quickly. Correct me if I'm wrong, but what you're suggesting is for posts going forward, and posts that are Q centric.

My goal is to see ALL of the board searchable because much of the digging and research that was collected was not just related to items Q had in mind, but many ancillary topics and evidence discovered would help build the "parallel construct".

That's what I see as important, your thoughts?

91c771 No.496858

Might I suggest using SQLite as the DB for the"file format". It's a single file db that performs well for read heavy workloads, is single file, so easy to distribute, easily usable from PHP and just about any other programming language, and could easily be used to load a regular server based db (obv depending on how the schema is designed). Also multi-platform, so should keep everybody happy irrespective of what OS you use.

91c771 No.496999

>>496858

I forgot to mention, SQLite also supports full text indices via the ft4 virtual table type

6a8d0c No.497972

I made a thread a few minutes ago asking if a wiki could be a good format to organize findings? Could help with navigating. What do you think?

>>497784

dbb4a4 No.498755

>>495005

I've been working on exactly this. I'm pulling the catalog from ga & qresearch. Finding the research general threads and saving those with q posts. Only goes back to about 2/15 when I turned the machine on. Currently working on getting old posts reconstructed. 99% sure I can grab all breads from 8ch.

C# dll to scrape q posts and threads from 8ch. 8ch+ json format but could be serialized XML I guess

4073db No.499327

One of the anons from the other thread.

I'm not going to jump in too much if other's are doing something where we end up stepping on Pepe's toes. Couple of thoughts though…

- Full text searches/indexes can be garbage. Only good for reserved words

- Most likely want this in a relational database. Creating the schema would consist of a really simple data model. Not even sure I would worry about normalizing it.

- Messages (body) could be stored in a blob and be searched with wildcards.

- Only looking at about 10-15 different queries tops. All simple SQL statements except for a couple that would need to be hierarchical..but still easy.

- I was thinking to use MySQL or SQL Server for the DB Engine.

- Biggest challenge will be the parsing of the threads and crumbs into a loaded format for the database. Once in a useful format…loading will be easy.

I see three main parts to this:

1) Getting the data so it can be loaded into a database.

2) Creating the database structures (really should be first)

3) Spitting out the queries, views, and sprocs that will be used. And putting a front end on it.

* almost doxxed myself and put a link to my web site…so close :-)

4073db No.499341

>>498755

>C# dll to scrape q posts and threads from 8ch. 8ch+ json format but could be serialized XML I guess

Good call me thinks

4073db No.499393

>>496431

I think that is a great thought. May be a good idea to just get one set started and loaded then look into the other boards.

We (at least I) can't see a way to search the 'board' itself, but to create a copy of the data in the threads and make those searchable.

7cdf2a No.501143

>>496431

>Download Chan.

>Host JSON of posts.

>Build simple interface.

>Use nginx as reverse proxy.

>??????????

Profit

Why the fuck do you want a DB when it's already JSON. FFS.

8dbdfa No.501166

>>499327

Open Source, Cross Platform search engine library - xapian.org

8dbdfa No.501352

>>501166

github .com/mcmontero/php-xapian JSON support and web-friendly middleware

7cdf2a No.501408

A better way to do this is to probably put everything client side. Make a cross platform application that just fetches new posts every so often. The browser is pretty perfect for this is we can set up a cross platform local server to host a local copy of qcodefag and this board.

Search

https:// github.com/bvaughn/js-search

Pros: Fast enough once index is built.

Cons: Have to build index, or send it from a server, ipfs, blockchain, whatever.

UI

rip it from qcodefag for q posts

Add 8ch layout to some button on qcodefag or some tab

Display the posts as normal, but add search bar for board side of new client for qcodefag and this board.

Pic related, it's easy to get .json formatted threads.

inb4 we all pwn ourselves.

7cdf2a No.501440

>>501408

Conveniently this also alleviates the clown issue should that garbage bill pass. I mean not really since we'll still own ourselves but fuck, we can try.

8143cc No.502752

>>502660

>>>502453

>

>>Research Threads Ideas. Please claim or create yours, let us know of more subject ideas

>

>>Quest for Research Searchability Thread

>

>>>494745 (You) (You)

>

>Thanks for including my thread. I'm not a coder so I'm not much more than a cheerleader. I am quite sincere in my belief that we have to make it all searchable. I'm not naive enough to expect a volunteer to tackle it. Without doxxing themselves, can any anon point me to a service or company that could accomplish this Quest?

8143cc No.502773

>>502660

>>494745 (You) (You)

Thanks for including my thread. I'm not a coder so I'm not much more than a cheerleader. I am quite sincere in my belief that we have to make it all searchable. I'm not naive enough to expect a volunteer to tackle it. Without doxxing themselves, can any anon point me to a service or company that could accomplish this Quest?

885f7e No.502802

>>502773

A pleasure anon. Here's wishing you all, all the very best in this noble quest. It would be Christmas for us all if you did it. GODSPEED.

8dbdfa No.502951

>>502773

The omega interface for xapian could do most work. wget to grab site data, json->csv converter to translate, and to be ready to go. All Free and open source software. Not quite plug and play, but a start.

Sample usage described:

xapian.org /docs/omega/overview.html

linode .com has very affordable linux shell hosting.

e4e2ff No.503420

Hey just had a thought but couldn't /ourguys/ look at all bullets ( like they have to on a crime scene )?

Wouldn't "LIPPEL" the one who had been "grazed" be able to connect bullet to her dna with whatever DNA would be on her?

What about the other student who was walking after being shot in both legs by 4 rounds?

Where is the DNA for that match to bullet?

What about the dead coach, the HERO we seen at the funeral? DNA match to that?

All this stuff might not help us ATM but IMO,

would play a big handle in the game out there with Q and friends?

https:// www.youtube.com/watch?v=cPvYxTa1ph4

https:// www.youtube.com/watch?v=cPvYxTa1ph4

https:// www.youtube.com/watch?v=cPvYxTa1ph4

LIPPEL

Another thing, this video she talks about how "BREAKING THE GLASS WITH SHOTS" starting at @ 2:05.and then she says they arrived..

MAYBE AN HOUR AFTER

She then states at the end of the video then she states the "Swat team/Police" was on the ground, she aid they were banging on the doors to let them in, she "DIDN'T TRUST IT WAS THEM, BECAUSE THE POLICE WERE BANGING ON THE DOORS - NOBODY GOT UP"

==IF THE SHOOTER DRESSED IN FULL METAL GARB SHOT OUT HER WINDOW, SHE WOULD OF SEEN IT BEING POLICE, AND THEY WOULD OF SEEN HER.. AND THEN PROBABLY OPENED THE DOOR THRU THE BROKEN GLASS INSTEAD OF BANGING ON THE DOOR WOULDN'T THEY ?"

Whole story right here in the video proves it was either a False Flag or some type of fuckery

1ca2ba No.503685

>>502773

It's not really a company, but wouldn't the person running the 4plebs archive be a good place to look for tools/code in this quest? Maybe he'd even be willing to assist? The site uses some fairly powerful search tools for certain halfchan boards already. I'm not a codefag so I apologize if this hasn't been suggested already.

https:// archive.4plebs.org/_/articles/faq/

8143cc No.503938

>>503685

That's a good suggestion. Do you know off the top of your head how many archive sites have been used at 4ch and 8ch? I know about archive.is and 4plebs, but I've seen a lot more. I'm pretty sure the threads are scattered about the internet.

912746 No.504261

>>495890

What about a bulletin board type of system like vbulletin for example? built in search and different forums and sub forums for topics.

8143cc No.505181

>>504748

>>505007

Thanks, I'm sure there's a lot of good stuff here, I monitor them daily. Are you implying posts have been excised from threads and posted in these subs?

8143cc No.505196

>>505181

> excised from threads and posted in these subs?

Sorry, didn't finish my thought, and they might not be captured in a search of posts in Qresearch? Not sure why you posted these.

ec7b2a No.506133

In naval warfare, a "false flag" refers to an attack where a vessel flies a flag other than their true battle flag before engaging their enemy.

It is a trick, designed to deceive the enemy about the true nature and origin of an attack.

In the democratic era, where governments require at least a plausible pretext before sending their nation to war, it has been adapted as a psychological warfare tactic to deceive a government's own population into believing that an enemy nation has attacked them.

In the 1780s, Swedish King Gustav III was looking for a way to unite an increasingly divided nation and raise his own falling political fortunes.

Deciding that a war with Russia would be a sufficient distraction but lacking the political authority to send the nation to war unilaterally, he arranged for the head tailor of the Swedish Opera House to sew some Russian military uniforms.

Swedish troops were then dressed in the uniforms and sent to attack Sweden's own Finnish border post along the Russian border. The citizens in Stockholm, believing it to be a genuine Russian attack, were suitably outraged, and the Swedish-Russian War of 1788-1790 began.

In 1931 the Japan was looking for a pretext to invade Manchuria. On September 18th of that year, a Lieutenant in the Imperial Japanese Army detonated a small amount of TNT along a Japanese-owned railway in the Manchurian city of Mukden.

The act was blamed on Chinese dissidents and used to justify the occupation of Manchuria just six months later. When the deception was later exposed, Japan was diplomatically shunned and forced to withdraw from the League of Nations.

In 1939 Heinrich Himmler masterminded a plan to convince the public that Germany was the victim of Polish aggression in order to justify the invasion of Poland.

It culminated in an attack on Sender Gleiwitz, a German radio station near the Polish border, by Polish prisoners who were dressed up in Polish military uniforms, shot dead, and left at the station.

The Germans then broadcast an anti-German message in Polish from the station, pretended that it had come from a Polish military unit that had attacked Sender Gleiwitz, and presented the dead bodies as evidence of the attack. Hitler invaded Poland immediately thereafter, starting World War II.

http:// www.bibliotecapleyades.net/sociopolitica/sociopol_falseflag29.htm

For hundreds of links to FF research/reports, use this link below. You are welcome Anons..

http:// www.bibliotecapleyades.net/sociopolitica/sociopol_falseflag.htm

8143cc No.509646

>>503685

>person running the 4plebs archive be a good place to look for tools/code in this quest?

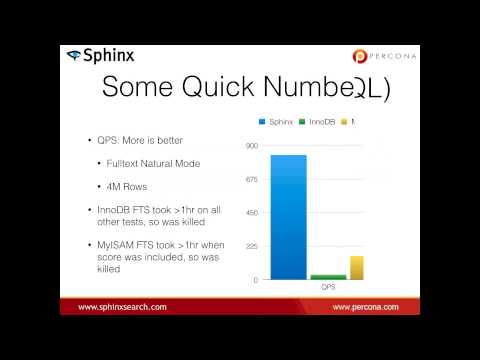

For the archives 4plebs uses sphinx search (http:// sphinxsearch.com/). It's used to index from the database and display search results very quickly.

Easy to implement but I would say it's worth it only if you have a lot of data to search through. For smaller datasets you can use full text search included in a regular database engine.

Also you can take a look at other search engines like Solr (http:// lucene.apache.org/solr/) and elasticsearch (https:// www.elastic.co/)

af8c7d No.510581

>>509646

been using duckduck for searches

af8c7d No.510592

cryptocert keys moded on puter… should i reboot or undo?

9176e6 No.519706

YouTube embed. Click thumbnail to play.

>>509646

I also would Second the Idea of using Sphinx - it can be connected to a currently live database and given clues and sample queries to Index all text in the DB - https://

www.percona.com/resources/technical-presentations/how-optimally-configure-sphinx-search-mysql-percona-live-mysql and they have a video. I don't think there are any existent Docker setups to play with, although I imagine 8ch is quite custom anyway.

dbb4a4 No.520068

>>499327

>>499341

OK So I think I've got my chanscraper console app working as designed.

AFAIK, I've got all the QPosts in a single JSON, I've got complete breads starting with Bread #364 2018-02-07. That's as far back as I've been able to reach programatically. Each complete bread has also been filtered into another json file containing just Q's posts.

The complete breads have only come from 8ch. The chanscraper is set up to whee it could scrape 4ch as well - assuming the json is still available.

I'm showing 825 QPosts - 1 more than qCodeFag because I believe I have a deleted

one. All counted it's 210 threads.

I've done all the hard work of setting up the old catalog/threads/posts. Its set up where you can specify how far back to refresh (to cut down on unnecessary http gets), It reads in the existing data, finds the new threads to search for on 8ch/greatawakening and 8ch/qresearch, and then archives the threads/posts that q has made locally.

If anybody wants the full Q archive as I have it now, here it is: 6mb https:// anonfile.com/H6B7G7dcbc/QJsonArchive.zip

I'm going to integrate the DJTweets + minute Deltas in this week.

Once I get this all cleaned up I'll cut it loose on Github if there are any C#codeFags interested.

My idea is to set up a simple HTML page using some javascript that can be run locally on a single users machine or website. Since the scraper is a C# dll it could be set up to run as a timed service on a web server to keep a site up to date.

98bd4e No.520151

>>520068

Code at github.com/anonsw/qtmerge does some similar things. Check it out, maybe there are some useful ideas to lift from there: anonsw.github.io

dbb4a4 No.520179

>>520151

Yeah I knew about that - but I'd already been getting data from QCodeFag. The QCodeFag data was the basis for what I have now since it had already done the scraping on 4ch. I wanted my own in C# source going forward that I can use locally with my other C# code.

7cdf2a No.520183

I don't know why nobody cares but it's trivial do download threads, posts, and boards through the 8ch api in the form of JSON. There is no reason to not have the local client make the get request every so often.

dbb4a4 No.520193

>>520183

Yep. That's why I did it. Getting all the JSON is easy once you know where everything is - but stuff sliding off the catalog was what made me want to keep a local archive.

7cdf2a No.520201

>>520193

I meant the hypothetical client with which people are searching this board and staying updated. That client should search for posts all on it's own instead of relying on a single source of truth. (saves infrastructure money too)

dbb4a4 No.520237

>>520201

Precisely.

Once I get it finished I'll provide a single HTML page that is like QCodeFag. View on your desktop.

Run the chanscraper then view the HTML to see new posts

98bd4e No.520263

>>520179

Cool, check out qanonmap too for posts no longer retrievable. I think they have some that qcodefag doesn't have.

dbb4a4 No.520278

>>520263

>qanonmap

Whazza qanonmap url?

https:// qanonposts.com/ ok?

98bd4e No.520298

>>520278

github.com/qanonmap

qanonmap.github.io

not sure if thestoryofq.com is related

But they are qcodefag forks.

dbb4a4 No.520323

>>520278

>>520298

Duh. I had it.

I noticed that qanonmap.github.io has 827 posts and qanonposts.com has 824.

That's going to cause my OCD great consternation.

98bd4e No.520373

>>520323

Yep, but I think new ones just haven't been added yet to qcodefag.

dbb4a4 No.520391

>>520373

Hmm.. That doesn't help me - I've got those. I'm only showing 825

07564d No.524371

>>494816

Ctrl-f is only good on a single thread. What researchers really need is a way to access the entire set of Q posts. I've built that capability for myself locally by parsing ctrl-s saves of the threads into a MySQL database and running SQL searches on that.

The best bet for a public search engine might be to cooperate with CodeMonkey to build a search capability for the boards. We'd still have to search each board separately, but at least we would be able to search each board all at once.

I've got most of the Q related posts from 4chan and 8ch locally, but I'm not sure how to make that much data publicly available. I've also got a fair amount of PHP code that I use to access and organize the raw data. I'd be willing to share it if I had a place to do it.

07564d No.524384

>>493751

Actually, I have had chan posts show up in browser search engine results, but I know this isn't what you're after. I've built the type of search capability you're after on my local machine. It still takes a lot of time to work with the posts, but it's definitely easier than anything we can do at the original sources.

07564d No.524395

>>494228

Timeline is easily generated when one has the ability to set the post time to something other than the current time. That's how I create timeline posts in my own database.

07564d No.524431

>>494015

I definitely appreciate that notable posts are included in the breads on each thread. It isn't necessary for them to be updated on each and every thread, but it is good to have them updated at least every day. Right now, I'm using the links in the bread posts to mark posts in my private database as being included in the bread. Given the volume of posts that I am now working with, these links make it easier to determine what is important to include.

07564d No.524456

>>494503

I use PHP because it's free. *shrug*

07564d No.524489

>>494471

If you're lucky, you can find your archives on archive.org. That site saves pages with about nearly the same HTML elements as the original page. Archive.is converts the classes used on the original page into their style equivalents, making for a parsing nightmare. When I've had to use the archive.is version of a page, it was a painstaking process to recreate the single post that I went to the archive to get. My parser code can parse the archive.org archives the same as the original, so it's easy to get all posts from that archive.

07564d No.524503

>>493929

I've already done this. I'm willing to share my data structures and parsers, if I have a place to do it.

07564d No.524511

>>495890

I've got tagging fields included in my data structure. Getting them filled is an entirely different matter. I've got a tool to help do it more efficiently than phpMyAdmin, but it needs a bit of work to make it just a bit more efficient so that more than one post can be updated in one pass.

07564d No.524530

>>504261

The challenge is classifying the posts to determine which sub forum to direct them to. Not trivial.

07564d No.524543

>>509646

There are over 750,000 total posts from both sites and all boards containing Q related posts. It's a large data set now.

838074 No.524965

Why not just build a 4chan archive site? That's the main thing lacking from 8ch.

7cdf2a No.525489

Literally just build an index of tags and use fucking client side javascript. Muh databases. Jesus Christ people. You could even let users share tags.

First one with a completed project wins. Peace.

dbb4a4 No.525531

>>524965

https:// 8ch.net/qresearch/archive/index.html

dbb4a4 No.527353

>>520237

Here's the archive again + a handy HTML page that you can use in your browser to view the archives locally. Works fine in Chrome and IE. Readme included.

https:// anonfile.com/W3f5H6d8be/QJSONArchive.zip

838074 No.529626

>>525531

OK, so why not do the fashionable, continuous integration FOSS thing and add searching to the archive site at the repo?

dbb4a4 No.530101

>>529626

I expect because 8ch is not a massive corporation with a bunch of resources at their disposal. /sudo/

838074 No.530155

>>530101

What difference does that make? Anons are gathered here. Why don't they just go there to assist in development instead of fragmenting and branching out to 1000 directions? Consolidate, integrate, then diverge.

6db142 No.530474

>>524431

>links

If it's server based something like http:// arborjs.org/ For data visualization/selection would then fix the mapping problem and help a lot with the search problem.

>links

There's also the Open Visual Thesaurus project to maybe grab code/ideas from www.chuongduong.net/thinkmap/ to view the data search and what else might be related to walk through the data.

dbb4a4 No.530677

>>530283

Here's a newer local archive that moves there.

I've put in some UI enhancements to the JSON Viewer HTML page. Seems to be working good. With a slight mod it could work with local json from any QCodeFag site or even direct from 8ch.

https:// anonfile.com/5ercH3d9ba/QJSONArchive_v1.zip

Getting the posts into 2 columns should be no problem. It's getting a reliable news source that is gonna cause you trouble.

I was planning on putting 3 columns in the viewer, QPosts, Times, DJTweets. In doing all this I've discovered a few things about 8ch/halfchan. The post id's are not guaranteed unique. The best unique key is time and I've found 2 posts that dropped at the same timestamp. Thematically I've been trying to key everything to time. [qposts, tweets, news]

dbb4a4 No.530720

07564d No.530920

>>530474

yEd can produce maps from spreadsheet data. That's one I know of.

https:// www.yworks.com/products/yed

Maybe when I get further along in the post tagging work, it'll be useful.

I'm toying with the idea of making my raw data available in some way, possibly in read only format. (Clowns can be destructive.)

07564d No.530978

>>525489

I would like to be able to allow others to tag posts in my database. Any ideas on how to keep clowns from shitting everything up?

My initial thought is to allow suggesting of tags (similar to comment logic in the blog) with moderators making final decisions on them.

07564d No.530994

>>530920

One of the big reasons I hesitate in making the entire database available is because a few of the images uploaded into the threads are obscene. I have no desire to inadvertently public that sort of thing. When I'm publishing a reviewed subset, the chances of that happening are low.

00c874 No.532910

>>502773

Perhaps?? just a guess.

Half Past Human .com

Absolutely the capability!

Discretion and interests match? Dunno.

00c874 No.532931

>>499327

Is there an interest in pre-selecting data?

For example, select only posts identified on "notable posts" lists from each general #.

Plus, of course, any to-from links on those selected, chained.

Just asking. DB size, usability, etc.

Or is the data set also for researching shill/troll themes? It is a possibility, so I ask.

07564d No.534887

>>532931

I'm working on that right now. I got started on this a week or so ago. I wrote a bit of code to travel back through context links, too. Hopefully, in a few days, I'll be able to repost my blog with the results of this work.

07564d No.534908

>>534887

A bit more to say about that:

It's my plan to include items that reach back to a Q post together with that Q post when I can identify such. I may do a little pruning to keep the length of the entry associated with a Q post under control. Not everything in a context thread is important, after all. I may have to think about further arranging of things. I'll think more about that as I get closer to a point where I can implement such a strategy.

dbb4a4 No.536855

>>520323

>>520373

So I managed to find the missing drops. My archive now has 827 total. As it turns out, the scraper was working as designed, filtering out Anonymous posts. The missing 2 for me were #823 and #819 when Q's trip wasn't working.

8143cc No.538741

>>532910

>Half Past Human .com

Wow. That's a new one to me.

8143cc No.538775

>>524543

>There are over 750,000 total posts from both sites and all boards containing Q related posts.

Yes, and that's the challenge. Making the Q "related" post searchable. Making Q's posts searchable is arguably not as important as making the body of related posts searchable as that's where the body of knowledge resides.

"You have more than you know" taunts us with its promise. We get pointed to Loop Capital, or Stanislav Lunev. We need to be able to search/aggregate all of the posts over weeks/months with a single search. The dedicated research threads are great as far as they go but we're missing a lot of other info posted as snippets.

8143cc No.538787

>>530994

>few of the images uploaded into the threads are obscene.

That does complicate it, but a lot of the information in the Q "related" posts is graphic. It seems culling of obscene content would need to be done manually to avoid throwing the baby out with the bathwater.

98bd4e No.540555

>>536855

Good catch. I found some in my db as well.

I like the post headers in the UI. Nice and clean.

838074 No.541964

>>536855

Yeah, qanonmap has had all of those for over a week now…

dbb4a4 No.543389

>>540555

What is everybody using as their sources for drops? 8ch? One of the QCode forks? Something else?

How do we verify that our collections are the same?

I've been adding a Guid for each post I scrape, just to give them all a unique value.

98bd4e No.545176

>>543389

qtmerge uses the raw JSON/HTML data where relevant from 8ch, 4plebs and trumptwitterarchive as it's source data. It also merges in the JSON from qcodefag/qanonmap. It currently uses the host, board, post timestamp and post number to sync.

I like the idea of matching the GUIDs along with a post hash using some method we agree on.

dbb4a4 No.547789

>>545176

Oh shit. Qtmerge is scraping HTML pages? You are dedicated. I sourced stuff from qcodefag that I couldn't get json for.

Do you have the full bread sources?

dbb4a4 No.547826

Phonefag right now.

>>545176

>>547789

There's an md5 field as you know in the 8ch json, but it wasn't in the data I got from Qcodefag. Because he'd modified the .com to strip HTML into a.text field.

My chanscraper keeps the md5 and the .com and strips HTML into .text.

Any C#fags here?

I did set up a GitHub yesterday and push the chanscraper out. Gonna get the Twitter stuff mashed in the next few days.

dbb4a4 No.548084

>>547826

Just ran my chanscraper again since apparently there were new posts last night as I was jacking around with Github.

I checked my posts with what's on qresearch and I think I'm good. Showing 839 total now.

New Q posts from 828 - 839.

I found a bug in the ChanScraper code too. A thing I've been working on that I forgot to remove. I'll push it out too and then link the GitHub.

dbb4a4 No.548229

>>548084

Here's the link to my new GitHub

https:// github.com/QCodeFagNet/SFW.ChanScraper

If you are going to run the ChanScraper and then view the posts locally, when you open the QJSONViewer.html page, don't open the [json\_allQPosts.json] file, open the newly generated [bin\json\_allQPosts.json] file.

The machine needed me to include all the existing posts/work json. It's kind of clunky the way I'm doing it because I want to keep this updated with the latest posts/work json. But for a normal user everything is kept updated automagically in the bin\json folders. The project is set up to copy new files if newer - so everything should be kept in sync.

If you are planning on running this locally you'll need the .NET framework 4.5 at least. Probably better to go with 4.5.2

https:// www.microsoft.com/net/download/dotnet-framework-runtime/net452

dbb4a4 No.548433

>>548229

You'll need Visual Studio free (at least) to build it unless you are a commandline master.

https:// www.visualstudio.com/vs/visual-studio-express/

98bd4e No.549377

>>547789

Only HTML of archive pages.

07564d No.549586

>>548229

Does your scraper work on the archive.is versions? These are the most complete most of the time since that is where so many of the pages were almost immediately saved by anons.

dbb4a4 No.550148

>>549377

Tedious Dayum. Think you could convert your full bread scrape into some json?

>>549586

Gotta link to one of the JSON files?

>>548564

Here's a mini local JSON viewer as an HTML page + allQPosts.json. @225KB

Includes all QPosts up to 2018-03-04T11:29:14

https:// anonfile.com/06HeJbdeb6/Mini_Local_JSONViewer.zip

I was just thinking that what we really need, to start off with is a single schema that we can all agree on. It will go a far way in interoperability.

I'm going to run some tests on my local QCodeFag install and see if it will work off of the ChanScraper _allQPosts.json file. I think it should.

The JSONViewer could work with straight files from 8ch or 4ch with a single minor change I forgot to put in.

dbb4a4 No.550167

>>549586

The ChanScraper includes the full JSON archive as of this morning. I haven't need to go back to any archive.is HTML archives because I've been collecting breads locally since the beginning of Feb. All the Q Posts before that I sourced from the QCodeFag forks.

dbb4a4 No.550218

>>550148

Here's what the JSON schema I'm working with looks like.

[

{

"source": "qresearch",

"threadId": 544266,

"link": "https:// 8ch.net/qresearch/res/544266.html#544985",

"imageLinks": [

{

"url": "https:// media.8ch.net/file_store/ffd6128f5949e4d4f6f3480236a63be002ffc5e59c0a31714360624d8ce45170.jpeg"

},

{

"url": "https:// media.8ch.net/file_store/ffd6128f5949e4d4f6f3480236a63be002ffc5e59c0a31714360624d8ce45170.jpeg/B42CA278-6C32-4618-A856-0CB9B680CC38.jpeg"

}

],

"references": [

{

"source": "qresearch",

"threadId": 0,

"link": "https:// 8ch.net/qresearch/res/0.html#548166",

"imageLinks": [],

"references": [],

"no": 548166,

"uniqueId": "19294a1b-8cae-435d-9503-8eb70c573d6b",

"_unixEpoch": "1970-01-01T00:00:00Z",

"text": "\r\r>>548157\r\rAlso not a real Q post\r\rQ",

"postDate": "2018-03-04T11:19:47",

"time": 1520180387,

"tn_h": 0,

"tn_w": 0,

"h": 0,

"w": 0,

"tim": null,

"fsize": 0,

"filename": null,

"ext": null,

"md5": null,

"last_modified": 1520180387,

"sub": null,

"com": "<p class=\"body-line ltr \"><a onclick=\"highlightReply('548157', event);\" href=\"/qresearch/res/547414.html#548157\">>>548157</a></p><p class=\"body-line ltr \">Also not a real Q post</p><p class=\"body-line ltr \">Q</p>",

"name": "Q ",

"trip": "!UW.yye1fxo",

"replies": 0

}

],

"no": 544985,

"uniqueId": "35c759aa-4998-4009-83a7-2af1b3273f28",

"_unixEpoch": "1970-01-01T00:00:00Z",

"text": "\r\r>>548166\r\rNOT A REAL Q POST\r\rQ",

"postDate": "2018-03-04T00:17:27",

"time": 1520140647,

"tn_h": 237,

"tn_w": 255,

"h": 1114,

"w": 1200,

"tim": "ffd6128f5949e4d4f6f3480236a63be002ffc5e59c0a31714360624d8ce45170",

"fsize": 271479,

"filename": "B42CA278-6C32-4618-A856-0CB9B680CC38",

"ext": ".jpeg",

"md5": "CbsCGk0pVEahunzSuV4LKw==",

"last_modified": 1520140647,

"sub": null,

"com": "<p class=\"body-line ltr \"><a onclick=\"highlightReply('548166', event);\" href=\"/qresearch/res/547414.html#548166\">>>548166</a></p><p class=\"body-line ltr \">NOT A REAL Q POST.</p><p class=\"body-line ltr \">Q</p>",

"name": "Q ",

"trip": "!UW.yye1fxo",

"replies": 0

}

]

98bd4e No.550251

>>550148

Let me clarify, HTML for just the archive pages (to capture threads not in catalog/threads.json). JSON for everything in else.

I'm working on how to share it, currently unoptimized and around 6 GiB of data uncompressed.

07564d No.551411

>>550148

http:// archive.is/https:// 8ch.net/cbts/res/*

It doesn't look like archive.is does JSON. Your parser doesn't do HTML?

dbb4a4 No.553092

>>551411

Yeah I've dug thru all the html looking for a reference to a json file. Can't find a reference to one either. My guess is, that once it drops off the main thread catalog, the JSON is no longer available. Too bad because that's the meat in a simple format.

No the machine is more of a scraper (grab data and save it) than a parser. It does parse the HTML out of the .com field into .text like QCodeFag does though. It's not designed to read thru html pages to look for posts.

It has a local baseline archive of everything.It reads in that entire local and then figures out the json breads it needs to download from the 8ch/qresearch/catalog.json. Then it downloads all those new breads and resets itself so you don't download everything every time - only the breads from the past [x] days.

dbb4a4 No.553109

>>550251

You've got a database? I assume that's with all the images as blobs?

dbb4a4 No.554074

Here's an updated mini local JSON viewer as an HTML page + allQPosts.json. @225KB

I updated it so it works with the raw json from 8ch.

https:// 8ch.net/qresearch/res/553655.json

Could probably use an [ascending/descending] button but…

Includes all QPosts up to 2018-03-04T11:29:14

https:// anonfile.com/z4U1Jdd9b9/Mini_Local_JSONViewer.zip

If folks don't like a zip, it's only 2 files they can download the HTML file (ChanScraper) and the allQPosts.json (Console\bin) file on github https:// github.com/QCodeFagNet/SFW.ChanScraper

07564d No.554309

>>553109

My images are kept as separate files in original form. Only the links are kept in the database. Here's the record definition for MySQL:

CREATE TABLE `chan_posts` (

`post_key` varchar(31) NOT NULL COMMENT 'site/board#post (post is set to length 9 with . fill.',

`thread_key` varchar(31) NOT NULL COMMENT 'site/board#thread (thread is set to length 9 with . fill.',

`post_site` varchar(19) NOT NULL COMMENT 'For editor post, use editor. For spreadsheet, use sheet.',

`post_board` varchar(15) NOT NULL COMMENT 'For editor post, use editor. For spreadsheet, use sheet.',

`post_thread_id` int(10) UNSIGNED NOT NULL COMMENT 'For editor post, use 1. For spreadsheet, use row.',

`post_id` int(10) UNSIGNED NOT NULL COMMENT 'For editor post, use next available. For spreadsheet, use column converted to number.',

`ghost` int(10) UNSIGNED DEFAULT NULL,

`post_url` text,

`local_thread_file` text,

`post_time` datetime NOT NULL DEFAULT CURRENT_TIMESTAMP,

`post_title` text CHARACTER SET utf8 COLLATE utf8_unicode_ci,

`post_thread_title` text CHARACTER SET utf8 COLLATE utf8_unicode_ci,

`post_text` text CHARACTER SET utf8 COLLATE utf8_unicode_ci,

`prev_post_key` varchar(31) DEFAULT NULL,

`next_post_key` varchar(31) DEFAULT NULL,

`wp_post_id` int(11) UNSIGNED DEFAULT NULL,

`post_type` set('editor','q-post','anon','approved','high','mid','low','irrelevant','timeline') NOT NULL DEFAULT 'anon',

`flag_use_in_blog` tinyint(1) NOT NULL DEFAULT '0',

`flag_included_on_maps` tinyint(1) NOT NULL DEFAULT '0',

`flag_included_in_bread` tinyint(1) DEFAULT NULL,

`flag_bread_post` tinyint(1) DEFAULT NULL,

`flag_relevant_img` tinyint(1) DEFAULT NULL,

`flag_relevant_post` tinyint(1) DEFAULT NULL,

`author_name` text,

`author_trip` text,

`author_hash` text,

`author_type` smallint(6) DEFAULT NULL,

`img_files` json DEFAULT NULL,

`link_list` json DEFAULT NULL,

`video_list` json DEFAULT NULL,

`editor_notes` text,

`tags` text,

`people` text,

`places` text,

`organizations` text,

`signatures` text,

`event_date` datetime DEFAULT NULL,

`report_date` datetime DEFAULT NULL,

`timeline_title` tinytext

) ENGINE=InnoDB DEFAULT CHARSET=utf8;

ALTER TABLE `chan_posts`

ADD PRIMARY KEY (`post_key`),

ADD KEY `post_id` (`post_id`),

ADD KEY `thread_key` (`thread_key`),

ADD KEY `site_board` (`post_site`,`post_board`);

I'm considering making the database publicly available. I need to figure out how much space it will take up and whether it will fit within my current hosting plan. At present, I have over 880,000 posts in the database. The size of the database file for just this table without the images is 1.1GB. There's another GB for images of Q posts, but this is only the fraction that is Q posts, bread posts, and for the context posts related to these.

07564d No.554376

>>554309

I guess I should start uploading. I've got the unlimited plan. Anyone want to write the search feature for it? Preferred language is PHP.

98bd4e No.555095

>>553109

For now it just uses a dedicated file system.

With images gathered so far this mirror's total size is 193 GiB.

dbb4a4 No.560076

>>555095

holey phuck. 193 GB. That's for a full archive of all breads + images? My local scrape of Q breads and posts as text only comes in at 6mb. My local QCodeFag install with text + Q images is just under 100mb.

193GB is getting unmanageable.

98bd4e No.560415

>>560076

Yes, unoptimized and incomplete.

07564d No.564762

>>560076

Not unmanageable. Just big. Maybe every thread needs its own directory for its images. And maybe the data needs to be moved to my other drive locally.

07564d No.564862

I'm working on the export files now. I need to change the posts just a bit before I can make them public.

I promised that no links would go to 8ch and particularly qresearch, and also that I would redact mentions of them from the content. I already do this on my blog, but I simply broke the links rather than made them go somewhere else. To get the most out of the republishing of the posts, I need to convert the >> and >>> links so that they link to posts stored on my own site. This is probably better anyway since many posts and threads are now missing from their original locations.

dbb4a4 No.568187

>>564762

Yeah it's not totally unmanageable. It's more like moving a full grown oak tree. You can do it, but it's a huge pain in the ass. I was thinking more in terms of moving it around the internet or hosting. That's a pretty big db.

I rejiggered the ChanScraper to archive all the breads even if there isn't a Q post in that bread. It rendered 215 NEW complete breads and brought my jason net filesize from 6MB to 200MB. Starts around "Q Research General #358".

That's with no images, just the raw JSON from 8ch. Each bread is around 700kb.

98bd4e No.568666

>>568187

I did some research on collecting the CBTS threads from 4chan/pol the other night and the results might be useful for others. They can be found at the bottom of the page here:

https:// anonsw.github.io/qtmerge/catalog.html

It's still a work in progress.

dbb4a4 No.568861

>>568666

>anonsw.github.io/qtmerge/catalog.html

I may be able to give you an list of all those links from the data I have from QCodeFag

dbb4a4 No.569061

>>568666

>anonsw.github.io/qtmerge/catalog.html

nevermind looks like you got it covered. nice!

07564d No.569170

>>568187

Yes, the breads are essential. I've got them going back all the way through 4chan stuff. The breads are how you connect in the answers. If you connect up the contexts, most of them link back to a Q post at some point. Then the context of that post that was linked into the bread can be associated with the Q post. That is what I was working on before I started looking at making my entire database available for research.

98bd4e No.569329

>>569170

Were you able to capture any of the original 4chan JSON/HTML data? I wasn't researching Q at that time so I've relied on 4plebs.

d6b0f8 No.569596

>>494745

I have created a searchable application for /qresearch/.

The database is filling right now. I kept only the image attachments in order to save hard disk space.

At present 52,000 of the most recent posts on qresearch are loaded in the table with the attachments. We'll see how the storage works out.

I'll advise when anons can attempt to use the system.

07564d No.569793

>>569329

I've got most of it, yes.

07564d No.569900

>>554309

I don't know if y'all noticed, but I've got several columns in my database that are not part of the original data. Some of these are tagging fields: `tags`, `people`, `places`, `organizations`, and `signatures`. It would be difficult to automate the filling of these fields, but I don't want to entirely open up editing of these fields to anons, either, due to the potential of clown interference. There's no way I can fill all of them in myself. I have an idea to allow tags to be suggested and then allow up-voting and down-voting and coming up with an acceptance criteria before giving them a permanent place in the data record. Or maybe just leave them in that form with their ratings.

98bd4e No.570074

>>569793

Excellent. Will that raw JSON data be in the DB as well?

>>569900

I did notice, those are great ideas. Can I suggest letting each user have their own copy/edits of the metadata? The user-specific data could then feedback into the system for suggestions to others, etc. But primarily it gives the user some way to control the interference/noise.

dbb4a4 No.570566

>>570074

What JSON are you looking for anon? Bread before 2/6/2018?

dbb4a4 No.570604

I've rejiggered the ChanScraper to produce TwitterSmashed json. It includes any DJTweets within 60 mins of a Qpost. Here's what a [5], [8], [10] deltas look like.

{

"DJTtwitterPosts": [

{

"accountId": "realDonaldTrump",

"accountName": "Donald J. Trump",

"tweetId": 944665687292817415,

"text": "How can FBI Deputy Director Andrew McCabe, the man in charge, along with leakin’ James Comey, of the Phony Hillary Clinton investigation (including her 33,000 illegally deleted emails) be given $700,000 for wife’s campaign by Clinton Puppets during investigation?",

"delta": 5,

"link": "https:// twitter.com/realDonaldTrump/status/944665687292817415",

"uniqueId": "00e6951d-5f49-455b-bdd9-bda7f184d9c7",

"time": 1514060825,

"_unixEpoch": "1970-01-01T00:00:00Z",

"postDate": "2017-12-23T15:27:05"

},

{

"accountId": "realDonaldTrump",

"accountName": "Donald J. Trump",

"tweetId": 944666448185692166,

"text": "FBI Deputy Director Andrew McCabe is racing the clock to retire with full benefits. 90 days to go?!!!",

"delta": 8,

"link": "https:// twitter.com/realDonaldTrump/status/944666448185692166",

"uniqueId": "92fbb1a2-169e-412c-abba-6e441d3acbaa",

"time": 1514061006,

"_unixEpoch": "1970-01-01T00:00:00Z",

"postDate": "2017-12-23T15:30:06"

},

{

"accountId": "realDonaldTrump",

"accountName": "Donald J. Trump",

"tweetId": 944667102312566784,

"text": "Wow, “FBI lawyer James Baker reassigned,” according to @FoxNews.",

"delta": 10,

"link": "https:// twitter.com/realDonaldTrump/status/944667102312566784",

"uniqueId": "eabb202f-3b59-48c9-b282-f0110b8388a5",

"time": 1514061162,

"_unixEpoch": "1970-01-01T00:00:00Z",

"postDate": "2017-12-23T15:32:42"

}

],

"no": 158078,

"name": "Q",

"trip": "!UW.yye1fxo",

"sub": null,

"com": null,

"text": "SEARCH crumbs: [#2]\nWho is #2?\nNo deals.\nQ\n",

"tim": null,

"fsize": 0,

"filename": null,

"ext": null,

"tn_h": 0,

"tn_w": 0,

"h": 0,

"w": 0,

"replies": 0,

"md5": null,

"last_modified": 0,

"source": "8chan_cbts",

"threadId": 157461,

"link": "https:// 8ch.net/cbts/res/157461.html#158078",

"imageLinks": [],

"references": [],

"uniqueId": "e22306cc-2831-453a-ae1d-16e90aa23707",

"time": 1514060541,

"_unixEpoch": "1970-01-01T00:00:00Z",

"postDate": "2017-12-23T15:22:21"

}

98bd4e No.570634

>>570566

4chan JSON for pol between 2017-10-30 and 2017-12-01.

dbb4a4 No.570660

>>570634

I'll keep my eyes peeled. Finding old JSON for those days is hard. Is 12-1 when you started archiving? Got bread json < 2-6-2018?

07564d No.570766

>>570074

I could develop an export, I suppose. But that's low on my list of priorities at the moment. The data structure is above in the list. Minor alteration needed: My host does not support JSON fields. Substitute TEXT, and you should be good. If you want to write an exporter, I can review it and include it.

But I still don't have the data up there yet. I'm working on the alterations to the data needed to keep everything on site at the host.

07564d No.570809

>>570074

I was thinking of attaching the IP address to each suggestion to keep the up-votes and down-votes honest. Is that enough? Or maybe even too much? The other thing I could do is perhaps tie in the WordPress login system, since it's there anyway. It might take a bit of time for me to figure out how to limit permissions.

98bd4e No.570874

>>570660

Thanks, 4plebs is good for now, but a second witness is preferable. Started archiving Feb 15, but some old data was still available at the time.

For 8ch these are the oldest breads I have:

pol: 10509790 (2017-08-28)

cbts: 10 (2017-11-21)

thestorm: 1 (2018-01-31)

I don't have all breads after though, it is incomplete.

I've since stopped archiving pol/cbts/thestorm to save time/space.

98bd4e No.570944

>>570809

Not enough due to VPNs, DHCP, etc. The login may be the best way.

dbb4a4 No.570983

>>570874

I think you and I started archiving those about the same time. I've got complete json breads from 2/6/2018 to now. if you want any of that.

98bd4e No.571020

>>570983

I might already have it, is it in the QJsonArchive.zip from earlier?

dbb4a4 No.571161

>>571020

Ya - you probably have the breads from the last few days eh?

98bd4e No.571229

>>571161

I do, I'll call your dataset QCodeFagNet unless you want a different name. Instead of the zip I'll pull it from your github.

dbb4a4 No.571310

>>571229

Sounds fine. I'll try to keep it updated.

07564d No.575021

>>570944

Logins require email addresses. I guess it's always a choice whether to participate.

d6b0f8 No.583035

>>494745

Q Research General - searchable archive breads 716-477 presently online.

www.pavuk.com

username qanon

password qanon

updates as I find them

70e498 No.596604

6a9543 No.598094

There so much content being produced now that it should be compiled into a wiki in a dedicated thread. The other threads investigate and make the content, this one adds the best content into one big archive, updated in real-time ofc bc they never stop why should we pic related.

BUT WHY

To take Q's work to the next level we have to increase the public's basic awareness of the criminality being exposed, investigated, and terminated, by an order of magnitude. That order of magnitude is pretty normal people.

>be a normal person

>want to do the right thing but get a link to this Q thing and there's too much complex and """scary""" info what with muh job and family and everything else

>the big load of content is overwhelming and i don't know where to begin and have it be easy

<make 1 entry point to begin browsing the entire body of accepted content

<terse organization keeps it brief and saves the details for a leaf page a click away, as deep as is necessary

<keep source of body of accepted content continuously up to date

<using https for minimal integrity protection

>now i can begin a review of the evidence contained in the case file archive with a single click! jeff bozos eat your heart out nigger

>and look at short well-organized and sourced text, and pictures, and the odd video

>and easily get a run down on whatever topics i browse my way upon

>and now even though my eyes have been opened in a pretty dramatic way, it was easy to use and i know it'll be easy to share, to the topic level

70e498 No.602595

>>598094

I hear you anon.

The key is the content. We have the ability archive threads/qposts. Posts that Q references. Tweets. Known tripcodes/twitter accounts.

What is the source of all the evidence? The dedicated research threads? Notables? In order for it to be automagic, there needs to be a reliable single source here on 8ch. None of the codefag work I've seen reaches a level of what could be called AI - or the ability to discern which anon has posted a certifiable answer/evidence.

Non automated means anonomated, but that causes it's own set of issues.

I agree a wikipedia style thing would be good because it's familiar, but populating it with data may be an issue. Some of it's going to have to be entered in manually.

If all you are looking for is a location for an anon wiki, I think that's pretty easy.

6a9543 No.603402

>>602595

No, not automated, curated.

98bd4e No.603568

>>571310

Should I hit _allQPosts.json?

07564d No.605608

I'm stuck. I'm working on getting that database up for you, but I have to make some modifications to the `post_text` field so that those links don't come here to 8ch. (I promised that I wouldn't do that.) I'm trying to fix the `post_text` field so that the >> links refer back into the database, but I'm not familiar enough with the DOMDocument and related classes in PHP. Are there any good tutorials out there on how to do advanced manipulation of HTML using these classes? The reference manual stuff just isn't doing it for me.

07564d No.605926

>>605608

I should clarify something. Not only am I going to make the existing links self-reference, but I'm also going to revive those dead >> links and point them back into the database. I've got many of the deleted threads in my database, too, and I can make those available.

70e498 No.612945

>>603568

Ya that's fine. I'm going to update that today to cover the latest.

I've been working on a new local viewer that uses the twitter smashed data. It shows the delta + alt text of the tweet + a link to the tweet. I've noticed that alot of the image links I have a currently broken. I was thinking I'd just update those to point to one of the other QCodeFag branch archives rather than try and archive all the images as well.

Expect an update on GitHub later

70e498 No.613236

>>612945

Here's what it looks like. Just trying to finish off a sort idea and clean data.

07564d No.613641

Good news! I've got the code working which makes the post links compliant and refer back into the database. Almost as soon as I posted the request, it came to me that I was making things more complicated than they needed to be and a better algorithm came to mind. The algorithm is so good that in cases where good posts didn't link in 8ch, they will be linked on my site. That includes links such as the one Q pasted into the middle of a word the other day or when they are consecutive with or without comma or white space. Anywhere there is a >> followed by a bunch of digits, a link should be created. The only exception is where the post number of the link is greater than the post number of the current post. This type of error was encountered in early posts after the transition from one board to another. Anyway, I'm going to run a few more quick tests, and then I should be uploading to my host within a few hours. I still don't have code ready to search it, though.

70e498 No.613892

>>613641

When you get that worked out make sure to let us know. I've been wondering about that myself. The early halfchan no's are pretty big. I've found some bugs in my code around there being multiple references per Q post. It does happen on occasion and my scraper isn't catching them all.

I've just uploaded a bunch of json data to the https:// github.com/QCodeFagNet/SFW.ChanScraper/tree/master/JSON gihub. The json folder is what's generated when you run the ChanScraper, the smash folder when you run the TwitterSmash. Each of those folders has a Viewer.html file that can be used with just the _allQPosts.json or _allSmashPosts.json.

Like I said I need to clean up some dead image links for everything to be working right.

07564d No.618146

>>613892

You MIGHT be able to get thumbnails from archives, but you won't get full size images there, for the most part.

70e498 No.620330

>>618146

Ya think it's bad form to go lazy and link em to one of the qcodefag archives?

07564d No.622768

>>620330

Part of making those offline archives is storing the items. Plus, don't assume any platform is forever. There are too many clowns out there who don't want anyone to see this stuff.

So now I've got a bunch of export files of my database ready to upload. Next challenge: Automating the import on the hose.

07564d No.622903

>>622768

>import on the hose.

Do clowns alter typing?

07564d No.625024

The table of posts has been added to the database. It's all up there. (All I have, anyway.) I need to get a way to make searches available to you now.

70e498 No.632885

>>625024

So you have all the breads searchable as well?

07564d No.648528

>>632885

Everything is searchable. The database includes all posts I could find. I'm working on the search front end right now.

07564d No.648594

>>632885

This is what the front end looks like right now. I'm working now on turning that into a SQL statement that can search the database. I'm only an hour or two from putting this online.

07564d No.649300

>>648594

It's up there. The paging isn't working yet, so don't anyone complain about that. I'll fix it in the morning. I also discovered that a key range of posts didn't import properly. I'll fix that in the morning, too. For now, I've set the posts per page to 2000, which may cause timeouts, but it will allow people to play with things a bit.

http:// q-questions.info/research-tool.php

cc8139 No.649479

>>648594

ANON, great work.

70e498 No.650810

>>648594

HOLEY FUCK YES.

This crosses all breads? If so then this is exactly what we need. I can help you with the SQL if you need it.

SELECT * FROM tbl LIMIT 5,10; # Retrieve rows 6-15 you should also specify an ORDER BY

>>649300

How are you getting the breads? Maybe I can work out a way to get you those. Combine up somehow

70e498 No.651528

>>509646

I've been thinking about this. Preliminary research shows that elasticsearch and lucene would probably be the best match for what we've got. There are alot of tools that pile into elasticsearch. Any hostfags here with the ability to set up an elasticsearch node?

The data is big. Tons of images. A proper archive takes space. I'm holding @546 complete breads and with no images it's 250MB+. That's for like a month. By the end of the year the bread collection alone is going to be over 1.5GB.

The images I've got so far is around 100MB, but that's just from the Q posts - and even then I know I'm missing some.

Econ Godaddy hosting is like $45 a year. I'm thinking about just putting the chanscraper/twittersmash online, then write some simple apis. Get thread#, filteredThread, qpost# that kind of thing. Useful or no?

07564d No.652644

>>650810

My algorithm for getting breads is this:

1. Get the author_hash for the first post in a thread.

2. Mark the first posts in the thread that match that author_hash until the author hash doesn't match.

If someone jumps in before the baker is done, oh well. But that shouldn't be much of a problem because the breads get repeated a lot. I can mark posts as bread later, if need be.

70e498 No.654567

>>652644

Hmm… When I say bread I mean a full Q Research thread. Like this

https:// github.com/QCodeFagNet/SFW.ChanScraper/blob/master/JSON/json/8ch/archive/651280_archive.json

That's the straight bread/thread from 8ch. It includes all the responses whether the BV posted it or not.

I'm finding those by getting the full catalog from

https:// 8ch.net/qresearch/catalog.json, finding the breads/threads that have q research, q general etc in them, and then getting the json for that thread only from https:// 8ch.net/qresearch/res/651280.json

I think I see what you are doing - going thru and trying to mark the relevant posts?

07564d No.654852

>>654567

I haven't even looked at at that.

Paging is fixed, plus I gave you a couple other search parameters.

I'm still working on the import issue, but I at least have put the posts I initially identified as missing up there.

07564d No.654901

>>654567

>I think I see what you are doing - going thru and trying to mark the relevant posts?

Yes. Most of it is done automatically. Since I save the marks in the post records, I can go back in there and adjust it, if necessary.

5f9a22 No.663255

>>651528

>Useful or no?

I'm not the guy to ask. The discussions here went over my head immediately. Looks like there's some serious progress being made here:

>>648594

>>649300

One question I have for contributors here is when there is a consensus that you have created a viable search tool, how will you manage promulgation? Do it like a war room announcement on qresearch?

As many have noted, the search tool has to be hardened against tampering before release. Clowns/shills are devious and destructive.

70e498 No.664801

>>663255

I agree on shill proofing.

I've been playing around with a webAPI. I've got it working nice with all the q posts, looking for a specific post# like #929, and posts on a day. Returns json or xml. This is the Crumb Archive.

My plan is to expand that so that the archived breads can be accessed as well - each as a single json file. This is the Bread Archive.

I'm going to set it up where it's an autonomous machine. It will scrape and archive automagically moving forward from the current baseline. No delete. No put. No fuckery.

I'm pretty sure it would with the QCodeFag scraper repos.

The bread archive is pretty big. I'm sure there's no way I can archive images for all the breads. An image archive isn't what I've been focused on. The focus of this is only making the json/xml available from the chanscraper.

Once I can get the breads all up and being served automagically my plan is to set up an elasticsearch node and suck all the breads in.

I figure a year of godaddy hosting is currently $12 with unmetered bandwidth. I'll throw in.

07564d No.664904

>>664801

Yes, I'm concerned about that, too.

Perhaps it helps that this data does not reside only there?

In this case, it would take me about half a day to get it all up there again, if need be.

d6b0f8 No.664928

>>494745

Searchable Qresearch

www.pavuk.com

username: qanon

password: qanon

Updated regularly with the messages and images from Qresearch general.

07564d No.664960

I'm beginning to wonder if I'm up against some kind of limit on my remote host. I just tried importing into it again, and I'm still missing some posts.

Remote host: 1,010,127 records

Local machine: 1,049,610 records

d6b0f8 No.664975

I'm using the 8chan JSON API endpoints. I still need to pull from the archive.json file downloaded yesterday.

My server is on a linode so I have fast response time.

07564d No.664984

>>664960

Maybe I can split the table into 4chan and NewChan (my name for 8ch, since we can't link back to here) and see if they all go up.

d6b0f8 No.664990

You can search the text is the posts with wildcards. Say you want all posts with the word BOOM. Just enter *boom*.

Say you want the posts from Q with his tripcode and "boom"

Put !UW.yye1fxo in the trip code.

put *boom* in the comment

Click search button

voila.

d6b0f8 No.664998

Has anyone found a way to go back past the 25 pages in the console.json?

d6b0f8 No.665010

>>570604

Can I access this? I'd like to add the DJT tweets into the database. Twitter is wanting more and more data before they give me an API key.

d6b0f8 No.665054

U.POSTS.NEW is the new-format table.

U.POSTS.NEW.ATT is the table of attachment for the primary table. Each one is a link to a binary

5f9a22 No.666471

>>664990

Wow, awesome job! I knew it could be done. I'm going to need some help getting started. Could you put a qsearch for dummies tutorial together?

Did you have to create, or did this create a chronological list of all Q related threads and their titles if any? (/pol/cbts/CBTS(8ch)/The Storm/ qresearch)?

That might be a good Mnemonic to speed searches.

ee0f91 No.666874

how about:

-archive threads as they go

-convert to text files, with links to posts

-txt files are easily searchable

d6b0f8 No.666959

>>666471

I've not been back into this thread for a while. I'm running the qresearch import process to get up-to-date. One technique that is needed is to re-scan already imported threads for posts missed during initial scans.

Threads are imported from the catalog.json file. In this state, we know the thread number and the number of messages at that time. The only time we know a thread is closed is when the number of posts >= the number in the official "bake" count.

Therefore, my program keeps testing until the posts counter >= the bake counter and then marks the thread as complete in the thread table. This then prevents re-scanning all threads because we get only the open ones.

Multiple scans of posts are needed to get all of them and to deal with duplicate threads.

I use the 8-chan post number as part of the primary key to the threads and posts tables.

8GA_1 is 8chan Great Awakening post 1

8QR_655000 is 8chan Qresearch post 655000

The big problem is going back to find threads BEFORE the last 25 pages in the catalog.json. Therefore, I can't get anything earlier than when I first wrote the import.

d6b0f8 No.666983

The import routine uses the JSON API endpoint from the boards. In the JSON is the Unix timestamp of the message. This is a native field/object type in Pavuk. Thus all timestamps are set to UTC internally.

NOW, if I could get DJT's Twitter feed in JSON, it also has UnixTime and this goes in directly.

Twitter wants me to give them all sorts of documentation before they will allow me to use their API. Frankly, I don't have the time to deal with them or the inclination.

d6b0f8 No.666995

I can get other boards provided the endpoints are similar and that the catalog.json file still has links to the threads.

BO has never responded to my requests on how to get older threads.

d6b0f8 No.667022

Super simple.

Entry forms are also search forms.

Enter the data that you wish to match.

Click the search button.

Pavuk creates and then executes the appropriate query and returns the items in a Kendo grid. Scroll, resort, export to excel or click on a row to return to the entry form with your data.

*searching on timestamps has issues that i need to resolve*

d6b0f8 No.667026

d6b0f8 No.667075

The comments from the JSON API include markup and JS to go to real links. This is a problem with the storage and search. I pipe the comment string through Lynx with the -dump option and this gives me clean text in STDOUT and then a separator and then the list of actual links. I put the text in the comments and the links in a multivalue table. I'll expose the links tomorrow as a separate tab in the entry form.

70e498 No.667648

>>664984

What about 100k transactional batches?

5f9a22 No.667776

>>667075

Jesus Einstein, give us a starting point to keep up.

70e498 No.667886

>>665010

Yeah man hit it. I've got a github here you can browse around.

https:// github.com/QCodeFagNet/SFW.ChanScraper/tree/master/JSON

json/8ch has the filtered/unfiltered bread and archives in it. smash has the twittersmashed posts. I've been getting my twitter data from http:// www.trumptwitterarchive.com/data/realdonaldtrump/2017.json, 2018.json

I set up a test for the webAPI twittersmashed posts here https:// qcodefagnet.github.io/SmashViewer/index.html

I'm getting close on having the webAPI thing finished up. Just running some more tests and then I should be ready to go.

70e498 No.667927

>>666983

Yeah you could mebbe use the smashed json from me. I've already done the unix timestamp on the trump tweets. All 8ch posts and Twitter posts dervive from the same Post base object with the unix timestamp built in.

70e498 No.667971

>>666995

I think that's because you can't really get them. There is an 8ch beta archive here, but all the Q Research threads dissappeared shortly after we started archiving them. Even then, those archives are straight HTML. It's of no use to me. AFAIK, once it slides off the main catalog, its pretty much gone. Some trial and error got me a few breads, but not many.

5f9a22 No.668375

>>666995

>BO has never responded

I'm not the board owner, just some schmuck who started a thread he thought was being overlooked. You folks are so far out of my ballpark all I can do is try to keep it inside the curbs of what my original intent was.

I'd like to see a list/catalogue/file of all Q "related" posts.

Aaand I'd like to see a list of post Q "related" posts across all platforms/threads made searchable. Plenty of focus on Q, we need the early digging and free association.

70e498 No.668958

>>668375

Interesting concept you have anon. You want to be able to search across ALL 8ch? Not just Q Research? By platforms are you talking 4ch/8ch? or 4ch/8ch/twitter/reddit/facebook…?

07564d No.670142

>>667648

The first time I uploaded, I batched them in by 1000.

The second time, I batched them in by thread. I'm not sure how well the LIMIT clause on the SQL works.

In any case, I may have a problem on both computers. I could have sworn I had over 1.1 million records the other night. (Not to worry. I still have all of the source.) The solution may be to partition the table. I won't have to rewrite any code, but it'll chunk the table's file down into smaller sections.

This should be interesting. I've never had to partition a table before. Apparently, newer versions of MySQL do it automatically. But until then, it's gotta be done.

07564d No.670158

>>668958

Mine has 4chan, too.

07564d No.670221

>>666995

If threads are missing, you have to look in archive.org/web or archive.is. Of the two, archive.org/web is better for scraping because the HTML code is about as close to the original as they can make it. I can actually use the same scraper program on it.

Since the stuff that is on archive.is is so different from the original, I will need to write a new scraper for those. On several occasions, the post was important enough that I rebuilt it by hand.

With either archive, you need to know the URL, which can be tricky sometimes. Just having the post number won't do it. You must know the thread as well.

Just thought of something: When I get threads from these archive sites, what time zone do they show? I believe my stuff is saving to GMT when I save a post directly from a chan site. I'm not sure what I'm saving when I get posts from these archives.

70e498 No.670279

>>670221

I would think the time is relative to the archive home timezone. That is, unless archive.x has done some wizardry to change the time zone it's pulling at to be the time zone of the user requesting the original archive. That would be more problematic - but you could still deal. It should be marked what time zone and then you convert into the unix timestamp.

70e498 No.670289

>>670158

The 4ch breads or the 4ch Q posts?

70e498 No.670304

>>670142

What are the chances it's hanging on a specific record? I see that all the time doing inserts. Bad data kills it off.

70e498 No.670317

>>670142

You could look into raising the timeout. Mebbe it's just such a long job that it's taking too long and timing out? https:// support.rackspace.com/how-to/how-to-change-the-mysql-timeout-on-a-server/

07564d No.670322

>>666995

Here's a hint for how to find the post a dead thread belongs to: Go to the earliest archive of the thread on which you found the link, which will usually be on the archive.is site. If you're lucky, the link was still live when the thread was archived. The other thing to do is search earlier posts that you already have to see if someone else linked the same post.

07564d No.670332

>>670317

Time out isn't the problem in this case. Since I'm working with small batches at a time, they're quite quick.

07564d No.670388

>>670289

I have the vast majority of both. Go check it out.

http:// q-questions.info/research-tool.php

After I resolve the table size problem (which is what I think the real problem is), I think it would be good to work some more on my contexting program. On my local computer, I've got it so that it can look back through the links and show all available context with the post. What I haven't done yet is copy that contexting information to a Q post's context when I find one in the backward linking. It'll be ridiculously easy once I set about doing it. Then, when a Q post is pulled up, all that stuff that linked back to it can show together with it.

70e498 No.670421

>>670332

Hmm. Yeah just doing some easy math I can see how you would have more than 1mm records. We're at bread 815+ something here and with 751 post each that over 600k here on 8ch alone.

You may be onto something with that. Is there a limit? https:// stackoverflow.com/questions/2716232/maximum-number-of-records-in-a-mysql-database-table

Looks like number of rows my be determined by the size of your rows.

70e498 No.670470

>>670388

>q-questions.info/research-tool.php

So much data. It's mind boggling.

07564d No.670518

>>670421

Yes, there may be a 1GB limit on the file size, and I'm right about there now. If I partition, I can get around that.

98bd4e No.670526

Below is the qtmerge modified raw dataset (text-only) as of 2018-03-14 02:07 UTC.

I'm putting this out in the hopes that it may be useful to others for ETL, mining, search tools, archiving etc.

Some notes:

* The data is a synthesis of the the qtmerge datasets: https:// anonsw.github.io/qtmerge/datasets.html

* For an idea of threads that are available see: https:// anonsw.github.io/qtmerge/catalog.html

* eventcache.json file contains the posts/tweets/etc in chronological order. The type attribute currently dictates the local object structure (working to fix this to be more clean)

* refcache.json contains the detected post cross references (this is a work in progress)

* The referenceID attribute is the "primary key" between the files

* Timestamps are Unix Time and time strings are US Eastern

Extracted size: ~850 MiB

SHA-256 sum: d6ed89da05c0b714fc66b04ca66a8d701456d882d5f128ee1cef26c8d2e22eb6

http:// anonfile.com/dazfO8d4ba/qtmerge-text-2018-03-15_05.18.37.tar.bz2

07564d No.670571

>>670421

That's just the general threads. When I started linking through the breads, I found that I needed many of the other threads, too. Most of those are smaller, though.

5f9a22 No.670617

>>668958

> You want to be able to search across ALL 8ch? Not just Q Research? By platforms are you talking 4ch/8ch?

Not all 8ch. Just 4 and 8ch Q related threads. Q has posted in but a small part of all of the digging (and bullshit) threads and much info is contained in those threads. /pol/ was a cluster until adopting the /cbts/ threads, but they shouldn't be too hard to round up and include in the searchable database.

In fact, I'd only include the qresearch general threads since the GA/qresearch reset. Add the digging/ancillary threads as possible. Most of the gold is in the general's IMO.

07564d No.670657

>>670617

The reason I'm pulling in other threads is because they get cited as notable posts. I'm not bothering with them unless that happens.

d6b0f8 No.672305

I can get the other boards and other threads, the issue is disk storage. Linode gives me a lot of bandwidth, but only a few gigs of disk until I change my plan with them.

d6b0f8 No.672334

The limit of an OpenQM hash file (table) is 16TB. When this becomes a problem, I can create a distributed file (table) by primary key. Say, put all 8QR in 1 portion, 8GW in another. Simply a way to have physical storage allocated

Pavuk session records are GUIDS. (don't worry, I'll purge anons out of the storage.) It was done because of commercial requirements for SOX and other audit compliance issues. Remember, I created Pavuk to build commercial apps.

The distributed file is built by using the first 2 bytes of the GUID from the primary key. Thus, it has component files:

00

01

…

FE

FF

Or 256 parts.

Theoretical table size:

256 x 16TB = 4096TB

www.openqm.com

d6b0f8 No.672344

>>670526

I'm going to look at your work.

d6b0f8 No.672421

>>670617

I tried 4chan/cbts/index.html and got a 404 yesterday

d6b0f8 No.672572

Brother Anons, I can find the IDs of the threads by using the search function on Archive.is. For example, research general #2 was post number 799. Once I know this, I can go back to 8chan and pull up the thread.

Sadly, I cannot get it with JSON. I only can get HTML. This means parsing the HTML.

This means a new string parser, but it goes into the same table as the JSON, but with more work. Here's what the posts look like in HTML

d6b0f8 No.672659

I've put out a tweet thread showing the progress and asking if someone will step up to help lead a crowdfunding campaign so I can afford a bigger Linode.

41bee9 No.672688

>>672421

>I tried 4chan/cbts/index.html and got a 404 yesterday

I'd expect that. Threads sunset there rather quickly. I think most everything from 4ch is in http:// archive.is/search/?q=%2Fcbts%2F

I got 22,900 hits. Some people used 4plebs and maybe even other archives. Need to know all of the archive sites used so we can add them to the soup.